Final Fact Sheet

Over the course of three years, the V4Design research project produced many technical innovations. This page summarizes the main achievements and novelties published within the project in the form of a final fact sheet.

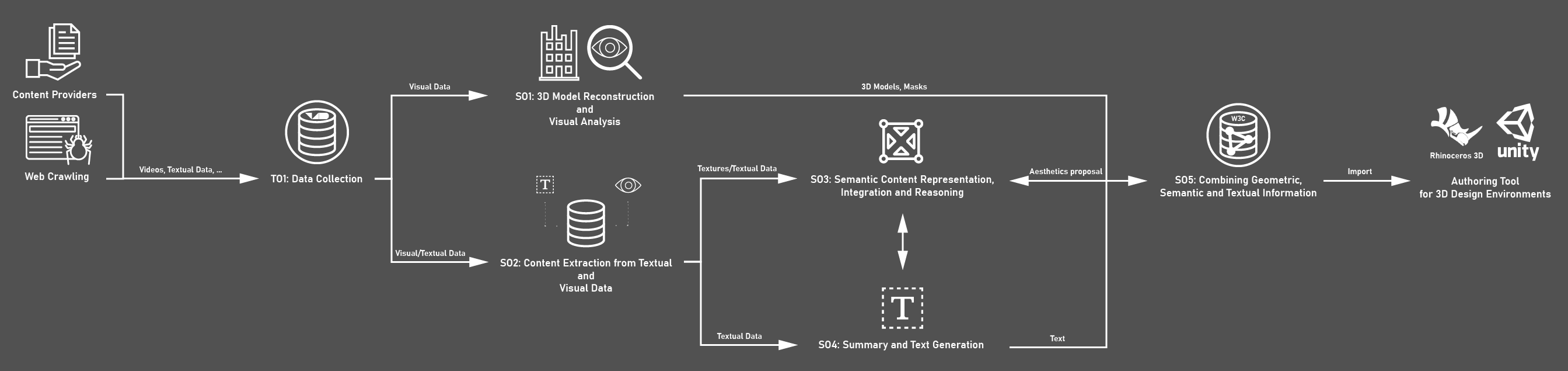

Nowadays large amounts of visual and textual data are generated, such as archival footage, documentaries, and movies, which are of great interest to architects and video game designers. In its current form, this content is difficult to reuse and repurpose for game creation, architecture, and design. To bridge this gap, V4Design develops technologies and tools that allow for automatic content analysis and seamless transformation, assisting the creative industries in sharing content and maximizing its exploitation. The conventional analogue prototypes used by architects and video game designers include scale models and physical demo environments, such as rooms and apartments. V4Design helps to produce such prototypes faster and more cost-efficiently than conventional methods. The differentiating factor of V4Design is its data collection and analysis processes, along with sophisticated solutions for semantically representing, aggregating, and combining annotations coming from visual and textual analysis of digital content. The aim is to structure and link data in a way that facilitates the systematic processing, integration, and organization of information and establishes innovative value chains and end-user applications. Starting from the collection of visual and textual content (TO1) from content providers and online sources (web crawling), innovative design tools and workflows have been implemented that leverage visual and textual ICT technologies. More specifically, innovative 3D model reconstruction techniques are applied to the visual content (videos and images) to extract 3D assets of interest (SO1), such as buildings and objects. Computer vision and text analysis solutions further process the content to extract annotations (SO2) and dynamically enrich the generated 3D models with information such as aesthetics, entities, BIM, categories, and opinions from online reviews and critiques (SO3).
At the end of the workflow, all the available information is semantically interlinked into rich knowledge graphs, offering advanced indexing and retrieval capabilities, while text generation is used to create multilingual summaries of the assets and assist end users in consuming the information (SO4). All information from the multimodal components is interconnected following the Semantic Web format (RDF graphs) and interlinked with Linked Data using semantic queries, composing a rich Knowledge Base with intelligent retrieval capabilities (SO5). Finally, everything is embedded into common 3D design environments such as Rhinoceros 3D and Unity through the V4Design authoring tools V4D4Rhino and V4D4Unity. The whole development process was carefully reviewed and guided by our user partners based on pre-defined pilot use cases (PUCs) that represent real workflows (user requirements) of our target group. To learn more about the PUCs, please visit our “Showcase of the final results” webpage.

Innovations summary

Below is a list that summarizes the key innovations and novelties achieved in the V4Design project. The integration of all these innovations into the authoring tools was especially highlighted by the “European Commission's Innovation Radar” (https://v4design.eu/2021/02/02/v4design-on-european-commissions-innovation-radar/).

Interoperability and semantics:

A unified model has been designed to represent the information produced by the V4Design multimodal components. To promote interoperability and reusability, it builds on the W3C recommendation Web Annotation Data Model, which, as far as we know, has not yet been used in the creative industries domain. Existing schemata and ontologies, such as the Building Topology Ontology (BOT), have also been used to inherit useful classes and relationships. Domain-specific semantic integration and reasoning rules have been designed based on the nature of the information that is extracted by the V4Design components. Further data enrichment is achieved by exploiting the wealth of Linked Open Data (LOD) to extract information that may be useful to users and that further supports the components' functionalities. Using LOD, we help produce richer descriptions (Text Generation) and offer users an intelligent, concept-based semantic search (V4Design tools).
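As a rough illustration of how an analysis result might be expressed with the W3C Web Annotation Data Model, the sketch below builds a single annotation as JSON-LD. The asset IRI, the label, and the helper name `make_annotation` are hypothetical and are not taken from the V4Design model; only the `@context`, `type`, `body`, and `target` keys come from the W3C recommendation.

```python
import json

# JSON-LD context published with the W3C Web Annotation Data Model.
ANNO_CONTEXT = "http://www.w3.org/ns/anno.jsonld"


def make_annotation(annotation_id, target_iri, label, purpose="tagging"):
    """Build one Web Annotation that attaches a textual label to a target.

    All identifiers passed in are illustrative; the actual V4Design
    schema is not reproduced here.
    """
    return {
        "@context": ANNO_CONTEXT,
        "id": annotation_id,
        "type": "Annotation",
        "body": {
            "type": "TextualBody",
            "value": label,
            "purpose": purpose,
        },
        "target": target_iri,
    }


annotation = make_annotation(
    "http://example.org/anno/1",           # hypothetical annotation IRI
    "http://example.org/assets/model-42",  # hypothetical 3D asset IRI
    "building facade",
)
print(json.dumps(annotation, indent=2))
```

Because the annotation is ordinary JSON-LD, it could in principle be loaded into an RDF store and linked with other vocabularies such as BOT, which is the kind of reuse the unified model aims for.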

Web crawling and retrieval:

While numerous solutions have been proposed for standalone data collection tasks such as web scraping or web search, none of them provides a complete system that can be applied to multiple, heterogeneous web resources. The V4Design Crawler is a fully-fledged solution that supports all the operations required for collecting content from the web and social media in a single module, which maps the multimodal results into a unified representation. It applies various techniques (e.g., scraping, retrieval from APIs) to integrate the most popular websites and platforms from which free multimedia can be acquired, as well as the data from the content providers of the consortium.

Aesthetics extraction:

V4Design proposes a novel framework to extract aesthetic concepts from images of architecture and paintings, as well as from videos and movies, to create an annotated aesthetics database. This database is used to create novel texture proposals for modifying the generated 3D models. Our innovation lies in the combination of two different methodologies: we use VGG16 DCNNs pre-trained on a large collection of places and then fine-tune them on two large, annotated painting datasets, WikiArt and Pandora. In this way, the same features that are used for scene recognition are also used to distinguish paintings from each other.

Style Transfer:

Style transfer is an aesthetics extraction technique that allows us to recreate the content of an image while adopting the style extracted from an image of a painting. In the reported period, we proposed a novel framework that transfers the style of an image collection to a content image while preserving the characteristics of the content. To that end, we combined Cycle-Consistent Adversarial Networks with Fast Adaptive Bi-dimensional Empirical Mode Decomposition. The experiments reveal that the proposed method produces better qualitative and quantitative results than the state of the art.

Spatio-temporal building and object localisation:

The proposed framework aims at the localization of buildings, other architectural constructions (e.g., bridges, tunnels), building façade elements (e.g., walls, doors, windows), and objects of interest in video frames or images. The scene recognition module, beyond classifying the depicted scene into one of the selected categories (e.g., campus, building facade), characterizes the images or video frames as indoor or outdoor scenes. In this way, it supports further analysis by routing the input to the appropriate next module: outdoor scenes are further analysed by the spatio-temporal building localization module, and indoor scenes by the spatio-temporal object localization module. Our innovation lies in the interaction with other components: spatio-temporal building and object localisation provides metadata that helps end users retrieve 3D models through the V4Design platform, along with masks that assist the 3D reconstruction procedure by removing unwanted clutter and improving dense reconstruction performance. For this purpose, deep learning models were trained and extended using specialized datasets fully aligned with V4Design's scope.
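The indoor/outdoor routing described above can be sketched in a few lines. This is only an illustration of the control flow: the `SceneResult` type, the module names returned by `route_frame`, and the "living room" category are hypothetical and do not reflect the actual V4Design interfaces.

```python
from dataclasses import dataclass


@dataclass
class SceneResult:
    """Output of a (hypothetical) scene recognition step for one frame."""
    category: str    # e.g. "campus", "building facade"
    is_indoor: bool  # indoor/outdoor flag produced alongside the category


def route_frame(scene: SceneResult) -> str:
    """Return the name of the module that should analyse the frame next."""
    if scene.is_indoor:
        # Indoor scenes go to spatio-temporal object localization.
        return "object_localization"
    # Outdoor scenes go to spatio-temporal building localization.
    return "building_localization"


frames = [
    SceneResult("building facade", is_indoor=False),
    SceneResult("living room", is_indoor=True),  # hypothetical category
]
for frame in frames:
    print(frame.category, "->", route_frame(frame))
```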

Language analysis and generation:

Concept extraction is a technology in high demand for several downstream applications, including language understanding, ontology population, semantic search, and question answering. However, advanced deep learning models have so far been used primarily for concept extraction in specialized (in particular, medical) discourse. For general discourse, straightforward single-token or nominal-chunk concept alignment and dictionary lookup techniques (such as DBpedia Spotlight) still prevail. We propose generic, open-domain, OOV-oriented concept extraction based on distant supervision with neural sequence-to-sequence learning, which produces models that do not rely on external knowledge after training.

Sentiment analysis:

In V4Design, we are also interested in extracting the opinions or sentiments conveyed in critiques and reviews from the web that relate to the entities (e.g., buildings) we expect to exist in the 3D asset dataset. To this end, we have developed a sentiment analysis component that analyses critiques and reviews at different points in time. The tool can handle noisy web texts and detect opinion attributes for different types of architectural sights. It is based on a state-of-the-art model for detecting emotions in short messages and on a domain-independent concept detection model. To our knowledge, no other tool exists for analysing dynamically changing user opinions on architectural sights and their attributes.

3D model reconstruction:

Processing pre-existing video footage poses two distinct challenges: (1) consecutive frames must be suitable for reconstruction, i.e., show sufficient displacement and overlap, and (2) video from multiple angles must be separated, and the overlapping parts must be processed individually. The automated video processing pipeline addresses both through SHOT and GRIC evaluations and by batching the resulting images. Additionally, potentially blurry frames are discarded using blurriness detectors based on image gradients, SAD, and FAST key points. When handling medium to large image collections, the major challenge is identifying images that depict the same scene and disregarding irrelevant ones. Because traditional feature matching methods are computationally expensive, we use an alternative method based on a pre-trained feature vocabulary to detect similar images much faster. Using input from the style transfer module, we can also restyle the textures of our models.
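To make the gradient-based part of the blur filtering more concrete, here is a minimal sketch in plain Python. It covers only the image-gradient idea, not the SAD or FAST-based detectors. The scoring function, the threshold value, and the toy images are assumptions chosen for illustration, not the pipeline's actual detector or settings.

```python
def gradient_sharpness(image):
    """Mean squared forward-difference gradient of a 2D grayscale image.

    `image` is a list of equal-length rows of numbers. Blurry frames have
    weak gradients and therefore a low score.
    """
    height, width = len(image), len(image[0])
    total, count = 0.0, 0
    for y in range(height - 1):
        for x in range(width - 1):
            dx = image[y][x + 1] - image[y][x]
            dy = image[y + 1][x] - image[y][x]
            total += dx * dx + dy * dy
            count += 1
    return total / count if count else 0.0


def keep_sharp_frames(frames, threshold):
    """Keep only frames whose sharpness score reaches the threshold."""
    return [f for f in frames if gradient_sharpness(f) >= threshold]


# Toy data: a hard edge versus a gentle ramp standing in for a blurred edge.
sharp = [[0, 0, 255, 255]] * 4
blurry = [[96, 117, 138, 159]] * 4

# The threshold is an arbitrary illustrative value.
kept = keep_sharp_frames([sharp, blurry], threshold=1000.0)
print(len(kept), "of 2 frames kept")
```

In practice a threshold like this would need tuning per footage source, since resolution and compression both change the typical gradient magnitude.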

3D-modeling and BIM enhancement:

The enhanced model extraction is deployed in V4Design as a separate, optional pipeline for further processing of 3D assets. It specifically targets assets containing building elements. This process can be subdivided into two major tasks. First, the input meshes are segmented into workable parts. Secondly, these subcomponents are labelled with a certain class label from a set of predefined classes. Segmentation is performed with the use of an octree-based region growing algorithm. This method is proven to be capable of processing a wide range of 3D models, including models generated from photogrammetry. Experiments run on 3D assets generated in V4Design show that region growing gives a proper segmentation of the scenes. Currently the enhanced 3D model extraction pipeline runs only for specific models requested by the user.
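The segmentation step can be illustrated with a simplified region growing sketch. Note that it works on a plain dictionary of voxel cells rather than an octree, and that the similarity criterion (dot product of surface normals against `min_dot`) is an assumption made for this example; the criteria used in the V4Design pipeline are not described here.

```python
from collections import deque

# 6-connected neighbourhood on a regular voxel grid.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def region_growing(cells, min_dot=0.9):
    """Group occupied cells into regions of similarly oriented surface.

    `cells` maps an (x, y, z) grid index to a unit normal vector. Two
    adjacent cells join the same region when the dot product of their
    normals is at least `min_dot`. Returns a dict: cell index -> region id.
    """
    labels = {}
    next_label = 0
    for seed in cells:
        if seed in labels:
            continue  # already absorbed into an earlier region
        labels[seed] = next_label
        queue = deque([seed])
        while queue:
            current = queue.popleft()
            cx, cy, cz = current
            for dx, dy, dz in NEIGHBOURS:
                neighbour = (cx + dx, cy + dy, cz + dz)
                if neighbour not in cells or neighbour in labels:
                    continue
                dot = sum(a * b for a, b in zip(cells[current], cells[neighbour]))
                if dot >= min_dot:
                    labels[neighbour] = next_label
                    queue.append(neighbour)
        next_label += 1
    return labels


# Toy scene: a floor strip (normals up) meeting a wall strip (normals sideways).
UP, SIDE = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)
cells = {(x, 0, 0): UP for x in range(3)}
cells.update({(3, 0, z): SIDE for z in range(3)})
labels = region_growing(cells)
print(len(set(labels.values())), "regions")
```

Each resulting region would then be passed to the second task, where it receives a class label from the predefined set.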

V4Design authoring tools:

During the project, the innovative aspect of the Architecture Authoring tool was evaluated and strengthened through design and implementation, notably during the second phase of the project, based on interactions with the user groups and early adopters. As a result, and considering the market analysis conducted for architecture-related applications of the V4Design technologies, the innovation aspect of the Architecture Authoring tool, and how it is understood, illustrated, and encapsulated, has evolved notably. The main innovation of the Architecture Authoring tool (consisting of the V4D4Rhino plugin and the Compute server) rests on its ability to integrate innovative services from other partners, such as 3D reconstruction and BIM extraction, to add value for Rhino users. This notably accelerates the market penetration of these services as modular components of a more complete software suite for architecture, which includes Rhino, Grasshopper, and more than 900 plugins. The Virtual Reality Authoring tool consists of two separate tools, the Unity 3D plugin and a VR App. The Unity plugin allows game developers to create games by importing V4Design assets into their Unity 3D projects (and to reconstruct new assets by uploading videos) and to use the provided triggers as game mechanics during game development. The VR App was created to help the same developers, or any developer of VR applications, to import and place 3D models from inside VR and recreate the environment on the go to enhance the final experience for the user.