by Simon Mille, researcher at Pompeu Fabra University

The relation between Knowledge Graphs and Natural Language currently attracts a lot of interest within the scientific community, mainly in the form of two questions:

- How to extract meaningful Knowledge Graphs from texts written in any Natural Language (e.g. English, Spanish, Greek, Hindi, Japanese)?

- How to produce well-formed informative texts in Natural Language from Knowledge Graphs?

In this short article, we provide details on the challenges related to these questions and connect them to what is being done in V4Design.

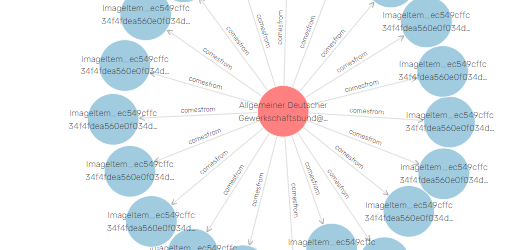

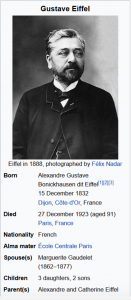

Nowadays, thanks to Semantic Web initiatives such as the W3C Linking Open Data Project [1], a tremendous amount of structured knowledge is publicly available as language-independent Knowledge Graphs. These Knowledge Graphs cover a wide range of domains, in the form of billions of triples (Subject, Property, Object). The following table shows a Knowledge Graph consisting of six sample triples related to Gustave Eiffel, representing information about his birth, his death, his nationality and his professional activity.

|          | Subject        | Property   | Object           |
|----------|----------------|------------|------------------|
| Triple 1 | Gustave Eiffel | birthDate  | 15 December 1832 |
| Triple 2 | Gustave Eiffel | birthPlace | Dijon            |
| Triple 3 | Gustave Eiffel | deathDate  | 27 December 1923 |
| Triple 4 | Gustave Eiffel | deathPlace | Paris            |
| Triple 5 | Gustave Eiffel | country    | France           |
| Triple 6 | Gustave Eiffel | architect  | Eiffel Tower     |

The Knowledge Graph above is the “semantic” version of the infoboxes found in Wikipedia (shown on the left), that is, the structured representation of the knowledge that lies behind these infoboxes [2]. The Linked Open Data cloud [3] currently contains over a thousand interlinked datasets (e.g. DBpedia or Wikidata [4]). Such a formal knowledge representation makes it possible to apply powerful algorithms, which have proved crucial in fields such as Question Answering.
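To make the triple format concrete, here is a minimal Python sketch (not V4Design code) that stores the six sample triples from the table above as plain tuples and regroups them per subject, roughly the way an infobox presents them. The `Triple` type and the helpers `to_infobox` and `objects` are hypothetical names; real Knowledge Graphs such as DBpedia identify resources and properties with URIs rather than plain strings and are usually queried with SPARQL.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Triple(NamedTuple):
    """One Subject-Property-Object statement (plain strings, not URIs)."""
    subject: str
    prop: str
    obj: str


# The six sample triples from the table above.
EIFFEL_KG = [
    Triple("Gustave Eiffel", "birthDate", "15 December 1832"),
    Triple("Gustave Eiffel", "birthPlace", "Dijon"),
    Triple("Gustave Eiffel", "deathDate", "27 December 1923"),
    Triple("Gustave Eiffel", "deathPlace", "Paris"),
    Triple("Gustave Eiffel", "country", "France"),
    Triple("Gustave Eiffel", "architect", "Eiffel Tower"),
]


def to_infobox(triples: List[Triple], subject: str) -> Dict[str, List[str]]:
    """Gather every property/object pair of one subject, infobox-style."""
    box = defaultdict(list)
    for t in triples:
        if t.subject == subject:
            box[t.prop].append(t.obj)
    return dict(box)


def objects(triples: List[Triple], subject: str, prop: str) -> List[str]:
    """Answer a simple (subject, property, ?) question over the triples."""
    return [t.obj for t in triples if t.subject == subject and t.prop == prop]


if __name__ == "__main__":
    for prop, values in to_infobox(EIFFEL_KG, "Gustave Eiffel").items():
        print(f"{prop}: {', '.join(values)}")
    print(objects(EIFFEL_KG, "Gustave Eiffel", "deathPlace"))  # ['Paris']
```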

In V4Design, the knowledge about the modelled buildings and objects is also stored in the form of triples. When a 3D model is produced from a video or a set of images, the first task is to identify the source asset (e.g. the Eiffel Tower). Once this is done, relevant triples can be retrieved, for instance from DBpedia. However, DBpedia, and Linked Open Data resources in general, are still largely incomplete: for example, the information that Gustave Eiffel built the Eiffel Tower is, surprisingly enough, not found in DBpedia (see Footnote 2). Other sources of information therefore need to be considered, and triples are extracted directly from images, videos, Wikipedia pages, architecture reviews, etc., using state-of-the-art multimedia and text analysis techniques. Neither multimedia analysis nor text analysis is a trivial task. In the case of the Eiffel Tower, the information is mentioned in the Wikipedia article about Gustave Eiffel:

“He is best known for the world-famous Eiffel Tower, built for the 1889 Universal Exposition in Paris, and his contribution to building the Statue of Liberty in New York.”

Note, however, that the sentence does not state directly that Eiffel built the tower, only that he is famous for it; as humans, we can infer from this that he actually built it. Such inferences are particularly challenging for Natural Language Processing tools.

UPF's content extraction pipeline for texts includes a series of tasks that need to be solved sequentially (some intermediate tasks are omitted here):

- concept extraction: “best” is a single-word concept, while “Eiffel Tower” is a multi-word concept;

- part-of-speech tagging and lemmatisation: “built” is a verb, and its base form is “build”;

- morphological tagging: “built” is in past tense;

- word sense disambiguation: “build” refers to a physical construction;

- entity disambiguation: “Paris” refers to the capital of France, not to Paris in Texas;

- cross-lingual generalisation of concepts: if the input text is in Spanish, “construir” in Spanish corresponds to the English “build”;

- sentence structure prediction: “build” modifies the noun “Eiffel Tower”;

- subject-property-object extraction: “Eiffel Tower” is the object of “build”, and by extension of the property “architect” (uses all the previously extracted information).
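The first steps of this list can be illustrated with a deliberately tiny, dictionary-based Python sketch. Everything in it is a hypothetical stand-in: the lexicons `MULTIWORD`, `LEXICON`, `CROSS_LINGUAL` and `SENSES` replace the trained statistical models a real pipeline would use, and word sense disambiguation and sentence structure prediction are left out. It only shows how each step consumes the output of the previous one.

```python
import re

# Hypothetical toy resources standing in for trained statistical models.
MULTIWORD = {("Eiffel", "Tower"), ("Statue", "of", "Liberty"),
             ("Universal", "Exposition"), ("New", "York")}
LEXICON = {  # surface form -> (lemma, part of speech, tense)
    "built": ("build", "VERB", "Past"),
    "construida": ("construir", "VERB", "Past"),  # Spanish
    "is": ("be", "VERB", "Pres"),
}
CROSS_LINGUAL = {"construir": "build"}  # Spanish lemma -> English concept
SENSES = {"Paris": {"Texas": "Paris (Texas)", None: "Paris (France)"}}


def extract_concepts(text, max_len=3):
    """Concept extraction: greedy longest match over known multi-word concepts."""
    tokens = re.findall(r"\w+", text)
    concepts, i = [], 0
    while i < len(tokens):
        for n in range(max_len, 0, -1):
            span = tuple(tokens[i:i + n])
            if n == 1 or span in MULTIWORD:
                concepts.append(" ".join(span))
                i += len(span)
                break
    return concepts


def analyse(text):
    """Tagging, lemmatisation, cross-lingual generalisation, entity disambiguation."""
    concepts = extract_concepts(text)
    result = []
    for c in concepts:
        default_pos = "PROPN" if c[0].isupper() else "X"  # crude guess for unknown words
        lemma, pos, tense = LEXICON.get(c, (c, default_pos, None))
        entry = {"concept": c, "lemma": CROSS_LINGUAL.get(lemma, lemma),
                 "pos": pos, "tense": tense}
        if c in SENSES:  # pick a sense from a context cue, else the default sense
            cue = next((w for w in SENSES[c] if w is not None and w in concepts), None)
            entry["entity"] = SENSES[c][cue]
        result.append(entry)
    return result


if __name__ == "__main__":
    sentence = ("He is best known for the world-famous Eiffel Tower, "
                "built for the 1889 Universal Exposition in Paris")
    print(extract_concepts(sentence))
    for entry in analyse(sentence):
        if entry["concept"] in {"Eiffel Tower", "built", "Paris"}:
            print(entry)
    print(analyse("La torre fue construida por Eiffel")[3])
```

The Spanish example shows the point of cross-lingual generalisation: “construida” ends up with the same concept, “build”, as the English “built”, so later steps can be shared across languages.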

These tools are usually statistical components trained on large quantities of data annotated with the phenomena that need to be covered in the desired languages and domains. For some tasks, such as the extraction of properties between the detected concepts, rule-based modules are more appropriate because they allow for more control and do not require annotated data (which is scarce). Concept extraction, cross-lingual concept generalisation and property extraction are the modules in which the main advances are being made in V4Design.
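The rule-based property extraction mentioned above can be sketched as a lookup from predicate lemmas to Knowledge Graph properties. This is a hypothetical illustration, not UPF's rule formalism: the `Predication` format (a predicate with an agent and a patient, as a sentence structure analyser might produce) and the two rules in `RULES` are assumptions. Because the rules are written by hand, it is easy to see exactly which triples they can and cannot produce.

```python
from typing import List, NamedTuple, Optional, Tuple


class Predication(NamedTuple):
    """Hypothetical simplified output of sentence structure prediction."""
    predicate: str          # lemma of the predicate, e.g. "build"
    agent: Optional[str]    # who performs the action, if expressed
    patient: Optional[str]  # what the action applies to, if expressed


# Hand-written, hypothetical rules: predicate lemma -> property.
# A matching predication yields the triple (agent, property, patient).
RULES = {"build": "architect", "design": "architect"}


def extract_triples(predications: List[Predication],
                    rules=RULES) -> List[Tuple[str, str, str]]:
    """Apply the rules; skip unknown predicates and missing arguments."""
    triples = []
    for p in predications:
        prop = rules.get(p.predicate)
        if prop is not None and p.agent and p.patient:
            triples.append((p.agent, prop, p.patient))
    return triples


if __name__ == "__main__":
    analysed = [
        Predication("design", "Gustave Eiffel", "Eiffel Tower"),
        # "the Eiffel Tower, built for the 1889 Universal Exposition": no agent
        Predication("build", None, "Eiffel Tower"),
        Predication("visit", "tourists", "Eiffel Tower"),  # no rule for "visit"
    ]
    print(extract_triples(analysed))
    # [('Gustave Eiffel', 'architect', 'Eiffel Tower')]
```

The second predication mirrors the Wikipedia sentence quoted earlier: the agent of “built” is not expressed there, so the rule can only fire once that agent has been recovered by inference, which is precisely the difficult part.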

Extracting information from text is one thing, but whenever structured data is available, the question of how to verbalise it arises. Remember that triples are extracted from multiple sources, including multimedia sources for which no corresponding text exists or has been identified by the V4Design pipeline. To make these contents accessible to end users, they need to be “verbalised”, that is, transformed into natural text. The following table contains two English texts that correspond to the Knowledge Graph shown above.

| Language | Possible verbalisation in Natural Language |
|----------|--------------------------------------------|
| English  | Gustave Eiffel was a French architect who was born on December 15th 1832 in Dijon and died on December 27th 1923 in Paris. He built the Eiffel Tower. |
| English  | The Eiffel Tower was designed by architect Gustave Eiffel. Eiffel was a French citizen born in Dijon on December 15th 1832. He died in Paris on December 27th 1923. |

The task of structured data verbalisation is more important than it may seem at first sight. On the one hand, some of the vocabulary used in triple repositories is far from transparent: for instance, the property r1LengthF is used to express the length in feet of the first runway of an airport. Without a proper explanation, it would be very difficult to understand the meaning behind this property. On the other hand, even if the meaning of all properties were understandable, the number of properties found on the Linked Open Data cloud is tremendous, and not all of them are informative in a particular context. The triples therefore need to be filtered so that only information relevant to the reader is presented. Finally, some studies have shown that people tend to prefer reading text to reading formal representations: would you rather read the contents as shown in the first table above, or as in the second?
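One simple way to tackle the vocabulary problem is a hand-curated gloss lexicon with a mechanical fallback. In the hypothetical Python sketch below, `GLOSSES` is an assumed resource, not a V4Design one; the camelCase-splitting fallback works for transparent names such as `deathDate` but yields little more than noise for `r1LengthF`, which is why such properties need an explicit gloss.

```python
import re

# Hypothetical hand-written glosses for property names.
GLOSSES = {
    "r1LengthF": "length of the first runway (in feet)",
    "birthPlace": "place of birth",
}


def split_camel_case(name: str) -> str:
    """Mechanical fallback: split a camelCase name into lower-case words."""
    words = re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+", name)
    return " ".join(w.lower() for w in words)


def gloss(prop: str) -> str:
    """Prefer a curated gloss; otherwise fall back to splitting the name."""
    return GLOSSES.get(prop, split_camel_case(prop))


if __name__ == "__main__":
    for prop in ["deathDate", "birthPlace", "r1LengthF"]:
        print(prop, "| fallback:", split_camel_case(prop), "| gloss:", gloss(prop))
```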

To convert triples into text, three main steps are performed in V4Design:

- selection of the triples to be verbalised: out of the whole set of triples in a knowledge base, only select the relevant ones given a query.

- packaging of the triples: group the triples into sentences; without packaging, the generator would render each property as an independent sentence (e.g. “Eiffel was born on December 15th 1832. Eiffel was born in Dijon.”).

- linguistic realisation: for each sentence, this module performs the following main actions: (i) definition of the sentence structure starting from the Knowledge Graph; (ii) introduction of language-specific words; (iii) resolution of word order and morphological interactions.
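The three steps can be illustrated end to end on the Eiffel triples with the Python sketch below. It is a strong simplification, not UPF's rule-based generator: the relevance filter is a plain set of properties, packaging groups triples that share a head verb, and realisation relies on a hypothetical micro-lexicon (`LEXICON`, `DEMONYMS`) and assumes the subject can be referred to as “He” after the first sentence.

```python
# Triples from the Eiffel table (subject, property, object).
TRIPLES = [
    ("Gustave Eiffel", "birthDate", "15 December 1832"),
    ("Gustave Eiffel", "birthPlace", "Dijon"),
    ("Gustave Eiffel", "deathDate", "27 December 1923"),
    ("Gustave Eiffel", "deathPlace", "Paris"),
    ("Gustave Eiffel", "country", "France"),
    ("Gustave Eiffel", "architect", "Eiffel Tower"),
]

# Hypothetical micro-lexicon: property -> (head verb, modifier pattern).
LEXICON = {
    "birthDate": ("was born", "on {}"),
    "birthPlace": ("was born", "in {}"),
    "deathDate": ("died", "on {}"),
    "deathPlace": ("died", "in {}"),
    "country": ("was", "a {} citizen"),
    "architect": ("designed", "the {}"),
}
DEMONYMS = {"France": "French"}  # lexical choice for the "country" property


def select(triples, subject, relevant):
    """Step 1: keep the triples about `subject` whose property is relevant."""
    return [t for t in triples if t[0] == subject and t[1] in relevant]


def package(triples):
    """Step 2: group triples that share a head verb into one sentence plan."""
    plans = {}  # dicts keep insertion order, so sentence order follows the input
    for _, prop, obj in triples:
        plans.setdefault(LEXICON[prop][0], []).append((prop, obj))
    return list(plans.values())


def modifier(prop, obj):
    """Fill the modifier pattern; 'country' objects become adjectives."""
    if prop == "country":
        obj = DEMONYMS.get(obj, obj)
    return LEXICON[prop][1].format(obj)


def realise(subject, plans):
    """Step 3: choose words, order them and pick a referring expression."""
    sentences = []
    for i, plan in enumerate(plans):
        head = LEXICON[plan[0][0]][0]
        modifiers = " ".join(modifier(p, o) for p, o in plan)
        referent = subject if i == 0 else "He"  # assumes a male person
        sentences.append(f"{referent} {head} {modifiers}.")
    return " ".join(sentences)


if __name__ == "__main__":
    chosen = select(TRIPLES, "Gustave Eiffel", {"birthDate", "birthPlace", "architect"})
    print(realise("Gustave Eiffel", package(chosen)))
    # Gustave Eiffel was born on 15 December 1832 in Dijon. He designed the Eiffel Tower.
    print(realise("Gustave Eiffel", [[(p, o)] for _, p, o in chosen]))  # no packaging
    # Gustave Eiffel was born on 15 December 1832. He was born in Dijon. He designed the Eiffel Tower.
```

Packaging alone removes the repetition described in the second step; richer structures, such as the passive “The Eiffel Tower was designed by...”, require the kind of linguistic knowledge that a toy lexicon cannot provide.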

Templates (pre-written sentences with slots to be filled) and fully statistical approaches are currently popular for these tasks, but they suffer from a lack of scalability (templates) or of reliability (statistical approaches). The main approach followed by UPF in V4Design is the development of linguistically informed rule-based systems that do not depend on annotated data and allow for the levels of flexibility and scalability required by the project. Experiments with state-of-the-art neural techniques are also being carried out for some subtasks of linguistic realisation, such as word order resolution.

Both the extraction of triples from text and the production of text from triples are becoming “hot topics” in Natural Language Processing research. As an indicator of this trend, the first international competitions in which teams develop systems on common datasets have only recently been organised: the WebNLG challenge in 2017 [5] for verbalisation, and the Open Knowledge Extraction challenge in 2018 [6]. Building standard datasets is crucial, since it allows for the development of more comparable and reusable tools. In the framework of V4Design, UPF is also contributing to the development of standard resources through the organisation of the Surface Realisation shared tasks of 2018 [7] and 2019 [8] (covering all subtasks of linguistic realisation), and of the upcoming WebNLG challenge in 2020, which will offer, for the first time, both extraction and verbalisation tasks on the same dataset.

To know more: simon.mille@upf.edu, montserrat.marimon@upf.edu, leo.wanner@upf.edu